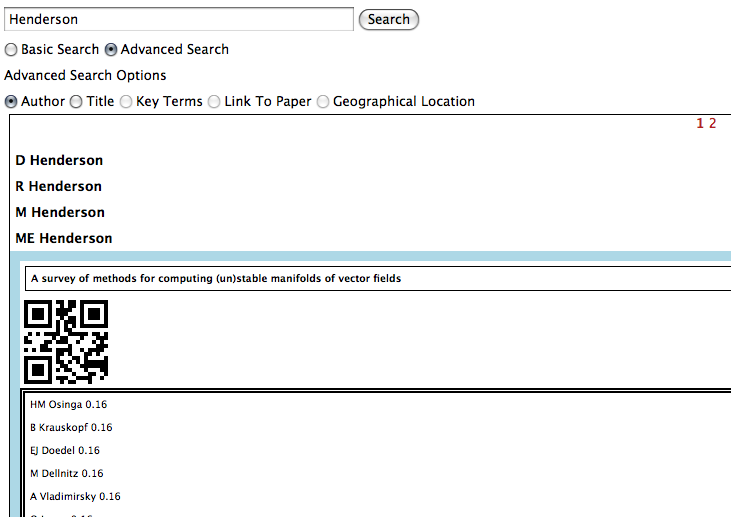

- Screenshots or diagram of prototype:

Searching for a person

Choosing an individual

Viewing information about them and people who ‘write like them’

- Description of Prototype: Explore people, publications, institutions and themes through OAI metadata

- End User of Prototype: “Jonathan is a researcher in evolutionary linguistics. He has become very interested in possible mathematical mechanisms for describing the nature, growth and adaptation of language, as he has heard that others, such as Partha Niyogi, have done some very interesting work in this area. Unfortunately, Jonathan is not a mathematician and finds some of the detail hard to follow. He realises that what he really needs to do is either go to the right sort of event or join the right sort of online forum, and find some people who might be interested in exploring links between his specialist area and their own. Both of these are difficult in their own ways. Going to the right sort of event would mean identifying what sort of event that would be, and he does not have enough money to go to very many. So he chooses to look up possible events and web forums, thinking that he can look through the participant lists for names that he recognises. This is greatly simplified by a system that uses information about the papers and authors that he considers most relevant; with this information it is able to parse lists of participants in events or online communities and give him a rough classification of how relevant each group is likely to be to his ideas.”

- Link to working prototype: writeslike.us

- Link to end user documentation: http://www.ukoln.ac.uk/projects/writeslike.us

- Link to code repository or API: http://code.google.com/p/writeslikeus/

- Link to technical documentation: http://www.ukoln.ac.uk/projects/writeslike.us (TBA)

- Date prototype was launched: Dec 01 2009

- Project Team Names, Emails and Organisations: Emma Tonkin, e.tonkin@ukoln.ac.uk, UKOLN; Alexey Strelnikov, a.strelnikov@ukoln.ac.uk, UKOLN; Andrew Hewson, a.hewson@ukoln.ac.uk, UKOLN

- Project Website: http://code.google.com/p/writeslikeus/

- PIMS entry: https://pims.jisc.ac.uk/projects/view/1263

- Table of Content for Project Posts: TBA


Value Add

Probably the most important thing I discovered in this project was the importance of ‘crowdsourced’ data in filling in the gaps between metadata and common knowledge.

Wikipedia is a very important resource for us as a source of miscellaneous information. We use it to improve the metadata and the supporting data we are assembling around that metadata, even though much of Wikipedia lacks the structure needed to search it with something like DBpedia. It’s not perfect, of course. Or perhaps it is fairer to say that our rough and imperfect ways of using the data cannot yet achieve the results one might like. Even so, it seems obvious that it’s an invaluable resource for the future.

Other data sources have been invaluable for us as well, particularly DBLP, despite its strong focus on computer science (which, however, means that for training across domains we should probably be looking elsewhere too 🙂).

Finally, social tags have been less effective for our purposes than one might imagine, for two reasons. There aren’t an awful lot of them around, and the ones that exist can only be detected by a relatively complex process of resolving title/author into the most popular mirror URI(s).

We’ll be publishing some of the extracted data shortly. It’s boring but useful stuff like lists of institutions, URLs, coordinates and enhanced metadata, so hopefully it will come in useful to others!

writeslike.us: Wins and Fails

Wins:

➢ Getting information such as institution names/URLs from Wikipedia, and widespread use of available web services in general

➢ Extracting names from OAI-DC was easier than expected, although there are still issues with identifying name order (which part of a name pair is the surname).

➢ Evidence-based learning methods can be applied successfully to the retrieved data to enhance it, which takes us into FixRep territory. The project has been very useful for establishing further use cases for ‘cleaning up’ metadata.

➢ Some interesting work in name / identity disambiguation through statistical clustering analysis. We’re looking at linking extracted info together with formal information such as that made available by the NAMES project.

➢ Storyboards defining the workflow of the system form an effective part of the agile development process, and were very useful for us.

➢ Using an SQL database as the repository was effective once problems with slow queries were addressed by normalising the data, reviewing the database schema design and adding indexes where necessary.
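The name-order issue can be illustrated with a minimal Python sketch. The project’s own XML parsing was written in Perl. The sketch uses the standard library’s ElementTree to pull `dc:creator` values out of an `oai_dc` record and then guesses which part of each name is the surname. The sample record and the ‘last token is the surname’ fallback are assumptions for illustration, not the project’s actual rules.

```python
import xml.etree.ElementTree as ET

DC = "{http://purl.org/dc/elements/1.1/}"  # Dublin Core element namespace

# Made-up oai_dc record; in a real OAI-PMH response this sits inside <metadata>.
SAMPLE = """<oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
    xmlns:dc="http://purl.org/dc/elements/1.1/">
  <dc:title>An example paper</dc:title>
  <dc:creator>Smith, Jane</dc:creator>
  <dc:creator>John  Doe</dc:creator>
</oai_dc:dc>"""


def creators(record_xml):
    """Return the dc:creator strings found anywhere in an XML record."""
    root = ET.fromstring(record_xml)
    return [el.text.strip() for el in root.iter(DC + "creator") if el.text]


def normalise_name(raw):
    """Guess (surname, forenames) from a creator string.

    'Surname, Forenames' is trusted as written; otherwise the last token
    is assumed to be the surname (a heuristic that can get the order wrong).
    """
    raw = " ".join(raw.split())  # collapse stray whitespace
    if "," in raw:
        surname, forenames = (part.strip() for part in raw.split(",", 1))
    else:
        tokens = raw.split(" ")
        surname, forenames = tokens[-1], " ".join(tokens[:-1])
    return surname, forenames


if __name__ == "__main__":
    for name in creators(SAMPLE):
        print(normalise_name(name))
```

The comma form is handled reliably. The fallback is where name-order errors creep in, for example with multi-word surnames such as ‘van der Berg’.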

Fails:

➢ Natural Language Toolkit (NLTK): we didn’t end up using it for its original purpose. Instead we went back to TreeTagger, although it had not been trained specifically on the sort of technical documents we were analysing.

➢ The text analysis expertise required for this project wasn’t already present in the team. It would have been a good idea to arrange training for the team to make sure we were all on the same page!

➢ Ensure all related documents, URIs, etc, are contained/linked in the project wiki.

➢ Cultural mismatch between research approach to defining requirements/expectations and development requirements/expectations. e.g. who writes the formal requirements document?

➢ Earlier storyboard scenario development would have been helpful, so a good lesson for next time.

➢ Swine flu and its effects were quite severe on this project. Our Portuguese collaborators were unavailable for quite some time, first (a) because of the danger of travelling to the UK and contracting the virus, and then (b) because of the effects of the illness itself, after they contracted it in Portugal!

Technical standards

In our project (Writeslike.us) we are using a number of different techniques and tools to get things done.

Coding

The scripting languages Perl and Python were selected as the main development languages. Python is good for text parsing thanks to its language features and an external library, the Natural Language Toolkit (NLTK.org), which can stem and tag text. Text parsing is a non-trivial task. NLTK therefore uses heuristic approaches and also supplies training data sets on which taggers and parsers can be trained. Perl is a natural choice for development under Linux, and it has a powerful set of libraries in the CPAN system. In particular, the XML parsing and full-text document crawling functions were written in Perl.
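The ‘training data’ idea can be illustrated without NLTK itself. The sketch below is a pure-Python unigram tagger. It follows the same basic idea as NLTK’s `UnigramTagger` but does not use its API. It learns the most frequent tag for each word from a tiny hand-tagged corpus, which is made up for this example, and falls back to a default tag for unseen words.

```python
from collections import Counter, defaultdict

# Tiny, made-up training corpus of (word, tag) pairs using Penn Treebank-style tags.
TRAINING = [
    [("the", "DT"), ("model", "NN"), ("predicts", "VBZ"), ("growth", "NN")],
    [("languages", "NNS"), ("change", "VBP"), ("over", "IN"), ("time", "NN")],
    [("the", "DT"), ("change", "NN"), ("is", "VBZ"), ("gradual", "JJ")],
]


def train_unigram(tagged_sentences):
    """Learn the most frequent tag for each (lower-cased) word."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}


def tag(model, tokens, default="NN"):
    """Tag each token with its learned tag, or `default` if unseen."""
    return [(token, model.get(token.lower(), default)) for token in tokens]


if __name__ == "__main__":
    model = train_unigram(TRAINING)
    print(tag(model, "The model is novel".split()))
```

A unigram tagger ignores context, so an ambiguous word such as ‘change’ always receives the same tag. NLTK’s n-gram taggers (with backoff) address this by also looking at neighbouring tags.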

As development tools we use the Eclipse IDE, with Perl and PyDev plugins for Perl and Python respectively. In some cases the Vim text editor is used instead. Because the tools are so varied, the project file structure should be kept flat and simple.

Infrastructure

For running and hosting we use proven tools such as MySQL and Apache. Subversion (SVN) is used to store and keep track of software versions.

Architecture

The calculation needed to build the network of author relations is too resource-intensive to be performed on demand. We therefore generate and store database tables of pre-calculated data as a form of caching. Our project reuses existing repository metadata: Dublin Core in oai_dc format, often derived from qualified DC. We therefore also had to add an XML parser and a full-text document crawler to the original feature list.
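Here is a minimal sketch of the pre-calculation approach, using Python’s built-in `sqlite3` as a stand-in for MySQL. A batch job fills a cache table of author pairs. Request-time lookups then read only from that table, whose primary key doubles as the index. The table names and the choice of ‘shared papers’ as the relation are hypothetical simplifications. The project’s relation network draws on more dimensions than co-authorship.

```python
import sqlite3

SCHEMA = """
CREATE TABLE authorship (paper_id INTEGER NOT NULL, author TEXT NOT NULL);
CREATE INDEX idx_authorship_paper ON authorship (paper_id);
-- Pre-calculated relations; the primary key also indexes lookups by author_a.
CREATE TABLE coauthor_cache (
    author_a TEXT NOT NULL,
    author_b TEXT NOT NULL,
    shared   INTEGER NOT NULL,
    PRIMARY KEY (author_a, author_b)
);
"""


def build_cache(conn):
    """Batch job: recompute shared-paper counts for every author pair."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM coauthor_cache")
        conn.execute("""
            INSERT INTO coauthor_cache (author_a, author_b, shared)
            SELECT a.author, b.author, COUNT(*)
            FROM authorship AS a
            JOIN authorship AS b
              ON a.paper_id = b.paper_id AND a.author <> b.author
            GROUP BY a.author, b.author
        """)


def peers(conn, author):
    """Request-time lookup: read only from the cache table."""
    return conn.execute(
        "SELECT author_b, shared FROM coauthor_cache "
        "WHERE author_a = ? ORDER BY shared DESC, author_b",
        (author,),
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO authorship VALUES (?, ?)",
                     [(1, "A"), (1, "B"), (2, "A"), (2, "B"), (2, "C")])
    build_cache(conn)
    print(peers(conn, "A"))  # [('B', 2), ('C', 1)]
```

The expensive self-join runs only in the batch job. Serving a peer list becomes a single indexed lookup, which is the trade-off the caching approach is after.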

To make the project’s functionality more usable, we created a REST interface that enables machine-to-machine access. The interface works in both directions: for adding new information about a person or publication, and for querying to find peers.
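The following sketch shows what a two-directional REST interface could look like, using only Python’s standard library. The endpoints (`POST /publications` and `GET /peers?author=NAME`), the JSON shape and the in-memory store are all hypothetical. ‘Peers’ here simply means co-authors.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse

# Hypothetical in-memory store standing in for the real database.
PUBLICATIONS = []  # each item: {"title": str, "authors": [str, ...]}


def add_publication(record):
    """Write direction: store a publication and its authors."""
    PUBLICATIONS.append({"title": record["title"],
                         "authors": list(record["authors"])})
    return {"stored": len(PUBLICATIONS)}


def find_peers(author):
    """Read direction: everyone who shares a publication with `author`."""
    found = set()
    for pub in PUBLICATIONS:
        if author in pub["authors"]:
            found.update(a for a in pub["authors"] if a != author)
    return {"author": author, "peers": sorted(found)}


class Handler(BaseHTTPRequestHandler):
    def _reply(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self):  # GET /peers?author=NAME
        url = urlparse(self.path)
        author = parse_qs(url.query).get("author", [None])[0]
        if url.path == "/peers" and author:
            self._reply(200, find_peers(author))
        else:
            self._reply(404, {"error": "not found"})

    def do_POST(self):  # POST /publications with a JSON body
        if self.path != "/publications":
            self._reply(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            record = json.loads(self.rfile.read(length))
            result = add_publication(record)
        except (ValueError, KeyError, TypeError):
            self._reply(400, {"error": "expected JSON with title and authors"})
            return
        self._reply(201, result)


if __name__ == "__main__":
    HTTPServer(("localhost", 8000), Handler).serve_forever()
```

With the server running, `curl -d '{"title": "P1", "authors": ["A", "B"]}' localhost:8000/publications` followed by `curl 'localhost:8000/peers?author=A'` should return `{"author": "A", "peers": ["B"]}`.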

Further development plans include adding automatic metadata extraction servers to improve the quantity and quality of the data, or to extract further information (FixRep, paperBase).

What programming languages we use and why we love them: technologies, standards and frameworks that make our lives easier (or harder).

In actual fact there are rather a lot of these! In the case of writeslike.us we have stuck to scripting languages, in particular Perl and Python. Each has its benefits and its issues. The arguments centre on things like Python’s semantic whitespace versus Perl’s ‘write once, read never’ look, which resembles line noise on a PPP link. However, both come with a great variety of extensions and libraries. The ‘killer app’ for Python was NLTK, and once one is used to Perl’s CPAN, it becomes indispensable for certain tasks. In the end, arguing about which language is better is pointless, even though it is great fun. The Computer Science department presently teaches Python, so Python is the language most CS students know best. EE students, according to recent evidence, seem to use either Perl or MATLAB.

In the end, the important point is that prototypes are developed quickly and easily, and that the techniques and datasets underlying them are well understood. If this is the case, then the rest can usually be adapted to suit – rapid development is not the same thing as throwaway prototyping, but rationalisation of software platforms and standards can very well be part of evolutionary prototype enhancement.

Day-to-day work

1. Scrum meeting + meeting minutes

- See the progress made through what people present: what they did yesterday, what they expect to do today, and what worked for them. This allows the manager to keep track of how development is progressing and how the team is performing.

- Find and solve potential problems before they become significant or expensive!

2. Collaboration on code

- Allows developers to learn from each other’s expertise; permits peer review of code (extreme-programming style?) and review of functionality.

- A long-term goal for this approach is to encourage developers to share code, to think of it as ‘our code’ rather than ‘my code’ and to be more open to review, reuse, constructive criticism, etc.

3. Moving code between machines & testing code on different workstations

- A single functional installation does not mean that a development project is finished, since it may be very difficult to set up on other platforms, to understand or to reuse.

- It should be functionally portable and include all necessary libraries, scripts, datasets and configuration to promote remote development, reuse and external contribution to the codebase.

- This also encourages review and testing since it tends to highlight any difficulties with installation and use of newly developed components.

Active collaboration and a flexible approach to development in particular tend to optimise productivity, in that time spent coding also has a knowledge sharing component – and there is relatively little time spent becoming familiar with code before beginning to contribute.

SWOT Analysis: writeslike.us

Strengths

Good knowledge of data source aggregation and normalisation

Agile rapid prototype development and evaluation

Links to IEMSR, FixRep, Aggregator: internal resources – strong support network

Weaknesses

Staff time is limited

Various programming languages in use

Opportunities

Lots of interest in auto-extraction of community networks data

University of Minho now have funding to take work forward

University of Leiden interest in specific technical tagging

Learning from NAMES and enhancing other existing services like NAMES

FixRep stuff: data provenance, evaluation, quality assurance

Establishment of user community

Threats

Reliance on external services/data

Quality of source data and availability of source data is variable

User community not firmly established

Writeslike.us project meeting to generate ideas about the project approach

Deciding whether a name appearing in two places belongs to one person or two is unsolvable without additional information identifying the person. Where that information is unavailable, one suggestion is to apply pure statistics. In other words, use a test (sample) data set to evaluate the error level associated with each possibility: two different people, or one person publishing in two places.
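Here is a minimal sketch of the ‘pure statistics’ suggestion. It starts from a hand-checked sample of name pairs, each with a similarity score and a true/false label for ‘same person’. It then measures how often a threshold rule wrongly merges two people or wrongly splits one. The score, the sample values and the equal weighting of the two error types are assumptions.

```python
def error_rates(labelled_pairs, threshold):
    """Error rates of the rule 'same person if score >= threshold'.

    labelled_pairs: (similarity_score, is_same_person) tuples from a
    hand-checked sample. Returns (false_merge_rate, false_split_rate).
    """
    same = [score for score, truth in labelled_pairs if truth]
    different = [score for score, truth in labelled_pairs if not truth]
    false_merges = sum(1 for score in different if score >= threshold)
    false_splits = sum(1 for score in same if score < threshold)
    return (false_merges / len(different) if different else 0.0,
            false_splits / len(same) if same else 0.0)


def best_threshold(labelled_pairs, candidates):
    """Candidate threshold with the lowest combined error (ties: first wins)."""
    return min(candidates, key=lambda t: sum(error_rates(labelled_pairs, t)))


# Made-up sample: (similarity score, really the same person?)
SAMPLE = [(0.9, True), (0.8, True), (0.4, True),
          (0.7, False), (0.2, False), (0.1, False)]

if __name__ == "__main__":
    for t in (0.3, 0.5, 0.75):
        print(t, error_rates(SAMPLE, t))
```

Weighting the two error types differently, for instance penalising false merges more heavily, only changes the `key` function.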

Then we switched to looking at practical methods to use within the Writeslike.us project, to identify individuals who write similarly or about similar topics.

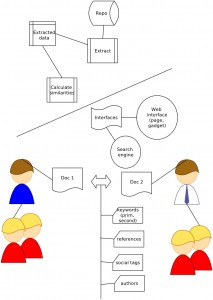

This lovely diagram shows how the system we’re building will do the ‘magic’. None of the dimensions available to us can discriminate identity on its own, so we need to bring several into play. The result will be an approximation incorporating evidence from several sources, which will hopefully make it more precise.

The table is just a representation of the way in which the heap of raw data will be mapped into something useful.
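One standard way to combine several weak dimensions, none of which settles identity alone, is a naive Bayes combination of likelihood ratios. It assumes the features are independent given the true answer. This is a swapped-in technique, not necessarily what the diagram describes. The feature names and probabilities below are invented for illustration; in practice they would be estimated from a labelled sample.

```python
import math

# Hypothetical values: (P(feature | same person), P(feature | different people)).
LIKELIHOODS = {
    "same_name": (0.95, 0.30),
    "shared_coauthor": (0.60, 0.05),
    "same_institution": (0.70, 0.10),
    "similar_keywords": (0.50, 0.20),
}


def same_person_probability(observed, prior=0.5):
    """Posterior P(same person) given the set of observed features.

    Assumes features are conditionally independent (naive Bayes), so each
    feature adds its log-likelihood ratio to the prior log-odds.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    log_odds = math.log(prior / (1 - prior))
    for feature, (p_same, p_diff) in LIKELIHOODS.items():
        if feature in observed:
            log_odds += math.log(p_same / p_diff)
        else:  # absence of a feature is evidence too
            log_odds += math.log((1 - p_same) / (1 - p_diff))
    return 1 / (1 + math.exp(-log_odds))


if __name__ == "__main__":
    print(same_person_probability({"same_name"}))
    print(same_person_probability(set(LIKELIHOODS)))
```

With these made-up numbers a matching name alone is weak evidence, while agreement across all four dimensions pushes the estimate close to certainty. That is the intuition behind bringing several dimensions onto the stage.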

Welcoming Wei Jiang to writeslike.us

A new staff member (casual staff) has just begun work, specifically on the Writeslike.us project. He is very experienced in designing and implementing web portals, familiar with machine learning concepts and enthusiastic about the project’s research area: constructing a graph of relations between researchers based on analysis of their papers.

Here is his home page

Created a mind map for the Writeslike.us project direction

Here is a mind map of the system architecture and the idea behind it. How to read it:

- From the repository, extract the metainformation

- save it to the internal database

- then use a process to determine who else is in this author’s community

- the process may have a variety of interfaces (a gadget for the repository interface listing authors in the same community, or a search engine saying ‘authors from the same community also wrote these papers’)
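The ‘who else is in this author’s community?’ step could be approximated in many ways. The sketch below takes one simple, hypothetical definition: every author reachable within a fixed number of co-author links, found by breadth-first search. The graph format and the two-hop default are assumptions.

```python
from collections import deque


def community(coauthors, author, max_hops=2):
    """Authors reachable from `author` within `max_hops` co-author links.

    coauthors: dict mapping each author to a set of co-authors.
    Returns {other_author: hop_distance}, excluding `author` itself.
    """
    distance = {author: 0}
    queue = deque([author])
    while queue:
        current = queue.popleft()
        if distance[current] == max_hops:
            continue  # do not expand beyond the hop limit
        for peer in coauthors.get(current, ()):
            if peer not in distance:
                distance[peer] = distance[current] + 1
                queue.append(peer)
    del distance[author]
    return distance


if __name__ == "__main__":
    graph = {"A": {"B"}, "B": {"A", "C"}, "C": {"B", "D"}, "D": {"C"}}
    print(community(graph, "A"))  # {'B': 1, 'C': 2}
```

Sorting the result by hop distance would give the ranked author list that a repository gadget could display.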

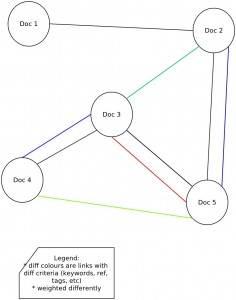

Documents are analysed along a number of (possibly weighted) dimensions, such as the keywords used, bibliographic references and social tags.

Here is a data model. Documents are related to one another along those dimensions (drawn in different colours in the diagram), and the process calculates a relation ‘value’ by following a particular document’s links.
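Here is a sketch of the ‘relation value’ calculation under stated assumptions. Each document is described by sets of keywords, references and social tags. Overlap in each dimension is measured with the Jaccard coefficient, and the dimensions are combined with fixed weights. The weights, the choice of Jaccard and the sample documents are all hypothetical.

```python
# Hypothetical weights per dimension (the text only says they "could be weighted").
WEIGHTS = {"keywords": 0.5, "references": 0.3, "tags": 0.2}

# Made-up documents described along each dimension.
SAMPLE_CORPUS = {
    "d1": {"keywords": {"language", "evolution"}, "references": {"r1"}, "tags": {"linguistics"}},
    "d2": {"keywords": {"language", "evolution"}, "references": {"r1"}, "tags": {"linguistics"}},
    "d3": {"keywords": {"language", "dynamics"}, "references": set(), "tags": set()},
    "d4": {"keywords": {"databases"}},
}


def jaccard(a, b):
    """Size of the intersection over size of the union; 0.0 if both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def relation_value(doc_a, doc_b, weights=WEIGHTS):
    """Weighted sum of per-dimension overlaps between two documents."""
    return sum(w * jaccard(set(doc_a.get(dim, ())), set(doc_b.get(dim, ())))
               for dim, w in weights.items())


def related(doc_id, corpus, top_n=3):
    """Rank the other documents by relation value to `doc_id`, dropping zeros."""
    scores = [(other, relation_value(corpus[doc_id], corpus[other]))
              for other in corpus if other != doc_id]
    scores = [(other, s) for other, s in scores if s > 0]
    return sorted(scores, key=lambda item: item[1], reverse=True)[:top_n]


if __name__ == "__main__":
    print(related("d1", SAMPLE_CORPUS))
```

Author-level relations could then be derived by aggregating these document scores over each author’s papers. That aggregation step is left open here.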